Comparison of Discrete Random Variable and Continuous Random Variable Pdf

4.1.3 Functions of Continuous Random Variables

If $X$ is a continuous random variable and $Y=g(X)$ is a function of $X$, then $Y$ itself is a random variable. Thus, we should be able to find the CDF and PDF of $Y$. It is usually more straightforward to start from the CDF and then find the PDF by taking the derivative of the CDF. Note that before differentiating the CDF, we should check that the CDF is continuous: as we will see later, a function of a continuous random variable is not necessarily a continuous random variable. Let's look at an example.

Example

Let $X$ be a $Uniform(0,1)$ random variable, and let $Y=e^X$.

- Find the CDF of $Y$.

- Find the PDF of $Y$.

- Find $EY$.

- Solution

- First, note that we already know the CDF and PDF of $X$. In particular, \begin{equation} %\label{eq:CDF-uniform} \nonumber F_X(x) = \left\{ \begin{array}{l l} 0 & \quad \textrm{for } x < 0 \\ x & \quad \textrm{for }0 \leq x \leq 1\\ 1 & \quad \textrm{for } x > 1 \end{array} \right. \end{equation} It is a good idea to think about the range of $Y$ before finding the distribution. Since $e^x$ is an increasing function of $x$ and $R_X=[0,1]$, we conclude that $R_Y=[1,e]$. So we immediately know that $$F_Y(y)=P(Y \leq y)=0, \hspace{20pt} \textrm{for } y < 1,$$ $$F_Y(y)=P(Y \leq y)=1, \hspace{20pt} \textrm{for } y \geq e.$$

- To find $F_Y(y)$ for $y \in[1,e]$, we can write

$$
\begin{aligned}
F_Y(y) &= P(Y \leq y) \\
&= P(e^X \leq y) \\
&= P(X \leq \ln y) && \textrm{since } e^x \textrm{ is an increasing function} \\
&= F_X(\ln y) = \ln y && \textrm{since } 0 \leq \ln y \leq 1.
\end{aligned}
$$

To summarize, \begin{equation} \nonumber F_Y(y) = \left\{ \begin{array}{l l} 0 & \quad \textrm{for } y < 1 \\ \ln y & \quad \textrm{for }1 \leq y < e\\ 1 & \quad \textrm{for } y \geq e \end{array} \right. \end{equation}

- The above CDF is a continuous function, so we can obtain the PDF of $Y$ by taking its derivative. We have \begin{equation} \nonumber f_Y(y)=F'_Y(y) = \left\{ \begin{array}{l l} \frac{1}{y} & \quad \textrm{for }1 \leq y \leq e\\ 0 & \quad \textrm{otherwise} \end{array} \right. \end{equation} Note that the CDF is not technically differentiable at the points $1$ and $e$, but as we mentioned earlier, we do not worry about this: $Y$ is a continuous random variable, and changing the PDF at a finite number of points does not change any probabilities.

- To find $EY$, we can directly apply LOTUS:

$$
\begin{aligned}
E[Y] = E[e^X] &= \int_{-\infty}^{\infty} e^x f_X(x)\, dx \\
&= \int_{0}^{1} e^x \, dx \\
&= e-1.
\end{aligned}
$$

For this problem, we could also find $EY$ using the PDF of $Y$: $$E[Y] = \int_{-\infty}^{\infty} y f_Y(y)\, dy = \int_{1}^{e} y \cdot \frac{1}{y}\, dy = e-1.$$

Since we had already found the PDF of $Y$, it did not matter which method we used to find $E[Y]$. However, if the problem had asked only for $E[Y]$, without asking for the PDF of $Y$, then using LOTUS would be much easier.
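As a numerical sanity check on this example, here is a minimal Python sketch (standard library only) that simulates $Y=e^X$ with $X \sim Uniform(0,1)$, compares the empirical CDF with $\ln y$, and computes $E[Y]$ both by LOTUS and from $f_Y$ using a plain midpoint rule. The function names, seed, sample size, and the choice of the midpoint rule are illustrative assumptions, not part of the original text.

```python
import math
import random


def cdf_y(y):
    """CDF of Y = e^X for X ~ Uniform(0, 1), as derived above."""
    if y < 1:
        return 0.0
    if y < math.e:
        return math.log(y)
    return 1.0


def pdf_y(y):
    """PDF of Y: 1/y on [1, e], 0 otherwise."""
    return 1.0 / y if 1 <= y <= math.e else 0.0


def midpoint_integral(f, a, b, steps=100_000):
    """Approximate the integral of f over [a, b] with the midpoint rule."""
    h = (b - a) / steps
    return h * sum(f(a + (k + 0.5) * h) for k in range(steps))


# Monte Carlo: draw X ~ Uniform(0, 1) and transform it to Y = e^X.
rng = random.Random(0)
samples = [math.exp(rng.random()) for _ in range(200_000)]

for y in (1.2, 1.8, 2.5):
    empirical = sum(s <= y for s in samples) / len(samples)
    print(f"y = {y}: empirical F_Y = {empirical:.4f}, ln y = {cdf_y(y):.4f}")

# E[Y] two ways: LOTUS (e^x f_X(x) over [0, 1]) and y f_Y(y) over [1, e].
ey_lotus = midpoint_integral(math.exp, 0.0, 1.0)
ey_from_pdf = midpoint_integral(lambda y: y * pdf_y(y), 1.0, math.e)
print(ey_lotus, ey_from_pdf, math.e - 1)
```

With the fixed seed the run is reproducible; the empirical CDF values should agree with $\ln y$ up to sampling error of a few thousandths, and both integrals should match $e-1$ closely.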


Example

Let $X \sim Uniform(-1,1)$ and $Y=X^2$. Find the CDF and PDF of $Y$.

- Solution

- First, we note that $R_Y=[0,1]$. As usual, we start with the CDF. For $y \in [0,1]$, we have

$$
\begin{aligned}
F_Y(y) &= P(Y \leq y) \\
&= P(X^2 \leq y) \\
&= P(-\sqrt{y} \leq X \leq \sqrt{y}) \\
&= \frac{\sqrt{y}-(-\sqrt{y})}{1-(-1)} && \textrm{since } X \sim Uniform(-1,1) \\
&= \sqrt{y}.
\end{aligned}
$$

Thus, the CDF of $Y$ is given by \begin{equation} \nonumber F_Y(y)= \left\{ \begin{array}{l l} 0 & \quad \textrm{for } y < 0\\ \sqrt{y} & \quad \textrm{for }0 \leq y \leq 1\\ 1 & \quad \textrm{for } y > 1 \end{array} \right. \end{equation} Note that the CDF is a continuous function of $y$, so $Y$ is a continuous random variable. Thus, we can find the PDF of $Y$ by differentiating $F_Y(y)$: \begin{equation} \nonumber f_Y(y)=F'_Y(y) = \left\{ \begin{array}{l l} \frac{1}{2\sqrt{y}} & \quad \textrm{for }0 < y \leq 1\\ 0 & \quad \textrm{otherwise} \end{array} \right. \end{equation}
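A small Python sketch can check both results for $Y=X^2$: a Monte Carlo estimate of $F_Y(y)$ compared with $\sqrt{y}$, and a central finite difference of $F_Y$ compared with $f_Y$ inside $(0,1)$. The helper names, seed, sample size, and step size `h` are assumptions made for illustration.

```python
import math
import random


def cdf_y(y):
    """CDF of Y = X^2 for X ~ Uniform(-1, 1): 0, sqrt(y), then 1."""
    if y < 0:
        return 0.0
    if y <= 1:
        return math.sqrt(y)
    return 1.0


def pdf_y(y):
    """PDF of Y: 1 / (2 sqrt(y)) on (0, 1], 0 otherwise."""
    return 1.0 / (2.0 * math.sqrt(y)) if 0 < y <= 1 else 0.0


# Monte Carlo check of F_Y(y) = sqrt(y).
rng = random.Random(1)
samples = [rng.uniform(-1.0, 1.0) ** 2 for _ in range(200_000)]
for y in (0.04, 0.25, 0.81):
    empirical = sum(s <= y for s in samples) / len(samples)
    print(f"y = {y}: empirical F_Y = {empirical:.4f}, sqrt(y) = {cdf_y(y):.4f}")

# Central finite difference: the slope of F_Y should match f_Y inside (0, 1).
h = 1e-6
slopes = {y: (cdf_y(y + h) - cdf_y(y - h)) / (2 * h) for y in (0.1, 0.5, 0.9)}
for y, slope in slopes.items():
    print(f"y = {y}: dF_Y/dy = {slope:.6f}, f_Y = {pdf_y(y):.6f}")
```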


The Method of Transformations

So far, we have discussed how we can find the distribution of a function of a continuous random variable starting from finding the CDF. If we are interested in finding the PDF of $Y=g(X)$, and the function $g$ satisfies some properties, it might be easier to use a method called the method of transformations. Let's start with the case where $g$ is a function satisfying the following properties:

- $g(x)$ is differentiable;

- $g(x)$ is a strictly increasing function, that is, if $x_1 < x_2$, then $g(x_1) < g(x_2)$.

Now, let $X$ be a continuous random variable and $Y=g(X)$. We will show that we can find the PDF of $Y$ directly using the following formula: \begin{equation} \nonumber f_Y(y) = \left\{ \begin{array}{l l} \frac{f_X(x_1)}{g'(x_1)}=f_X(x_1) \cdot \frac{dx_1}{dy} & \quad \textrm{where } g(x_1)=y\\ 0 & \quad \textrm{if }g(x)=y \textrm{ does not have a solution} \end{array} \right. \end{equation} Note that since $g$ is strictly increasing, its inverse function $g^{-1}$ is well defined. That is, for each $y \in R_Y$, there exists a unique $x_1$ such that $g(x_1)=y$. We can write $x_1=g^{-1}(y)$.

To see this, start from the CDF of $Y$:

$$
\begin{aligned}
F_Y(y) &= P(Y \leq y) \\
&= P(g(X) \leq y) \\
&= P(X \leq g^{-1}(y)) && \textrm{since } g \textrm{ is strictly increasing} \\
&= F_X(g^{-1}(y)).
\end{aligned}
$$

To find the PDF of $Y$, we differentiate $F_Y(y)=F_X(x_1)$, where $g(x_1)=y$:

$$
\begin{aligned}
f_Y(y) &= \frac{d}{dy} F_X(x_1) \\
&= \frac{dx_1}{dy} \cdot F'_X(x_1) \\
&= f_X(x_1) \frac{dx_1}{dy} \\
&= \frac{f_X(x_1)}{g'(x_1)} && \textrm{since } \frac{dx}{dy}=\frac{1}{\frac{dy}{dx}}.
\end{aligned}
$$
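The chain-rule step above can be checked numerically. The sketch below is a minimal illustration under assumed choices: $X$ has PDF $f_X(x)=4x^3$ on $(0,1]$ (the density used in a later example, so $F_X(x)=x^4$ there) and $g(x)=e^x$, which is strictly increasing and differentiable. It compares a central finite difference of $F_X(g^{-1}(y))$ with $f_X(x_1)/g'(x_1)$; all names and the step size are hypothetical.

```python
import math


def cdf_x(x):
    """F_X(x) for the PDF 4x^3 on (0, 1]: x^4 on that interval."""
    if x <= 0:
        return 0.0
    return x ** 4 if x <= 1 else 1.0


def pdf_x(x):
    """f_X(x) = 4x^3 on (0, 1], 0 otherwise."""
    return 4.0 * x ** 3 if 0 < x <= 1 else 0.0


# Illustrative strictly increasing, differentiable g(x) = e^x.
g_inv = math.log    # x1 = g^{-1}(y) = ln y
g_prime = math.exp  # g'(x) = e^x


def cdf_y(y):
    """F_Y(y) = F_X(g^{-1}(y)), valid because g is strictly increasing."""
    return cdf_x(g_inv(y))


def pdf_y(y):
    """f_Y(y) = f_X(x1) / g'(x1) with g(x1) = y."""
    x1 = g_inv(y)
    return pdf_x(x1) / g_prime(x1)


h = 1e-6
for y in (1.2, 1.8, 2.5):
    slope = (cdf_y(y + h) - cdf_y(y - h)) / (2 * h)  # numerical dF_Y/dy
    print(f"y = {y}: dF_Y/dy = {slope:.8f}, formula = {pdf_y(y):.8f}")
```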

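We can repeat the same argument for the case where $g$ is strictly decreasing. In that case, $g(X) \leq y$ if and only if $X \geq g^{-1}(y)$, so (since $X$ is continuous) $F_Y(y)=1-F_X(g^{-1}(y))$, and differentiating gives $f_Y(y)=-\frac{f_X(x_1)}{g'(x_1)}$. Because $g'(x_1)$ is negative, this equals $\frac{f_X(x_1)}{|g'(x_1)|}$. Thus, we can state the following theorem for a strictly monotonic function. (A function $g: \mathbb{R}\rightarrow \mathbb{R}$ is called strictly monotonic if it is strictly increasing or strictly decreasing.)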

Theorem 4.1

Suppose that $X$ is a continuous random variable and $g: \mathbb{R}\rightarrow \mathbb{R}$ is a strictly monotonic differentiable function. Let $Y=g(X)$. Then the PDF of $Y$ is given by \begin{equation} f_Y(y)= \left\{ \begin{array}{l l} \frac{f_X(x_1)}{|g'(x_1)|}=f_X(x_1) \cdot \left|\frac{dx_1}{dy}\right| & \quad \textrm{where } g(x_1)=y\\ 0 & \quad \textrm{if }g(x)=y \textrm{ does not have a solution} \end{array} \right. \tag{4.5} \end{equation}
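Equation 4.5 translates almost directly into a higher-order function. The sketch below is one possible encoding, not the book's: the caller supplies $f_X$, the inverse $g^{-1}$, the derivative $g'$, and a predicate saying whether $g(x)=y$ has a solution. As an assumed illustration it uses $X \sim Uniform(0,1)$ with the strictly decreasing $g(x)=e^{-x}$, for which $x_1=-\ln y$, $|g'(x_1)|=y$, and so $f_Y(y)=1/y$ on $[1/e,1]$; the absolute value is what keeps the density positive for a decreasing $g$.

```python
import math


def monotone_transform_pdf(f_x, g_inv, g_prime, has_solution):
    """Return f_Y for Y = g(X), with g strictly monotonic and differentiable (Equation 4.5).

    f_x: PDF of X
    g_inv: maps y to the unique x1 with g(x1) = y
    g_prime: derivative of g
    has_solution: predicate telling whether g(x) = y has a solution
    """
    def f_y(y):
        if not has_solution(y):
            return 0.0  # g(x) = y has no solution
        x1 = g_inv(y)
        return f_x(x1) / abs(g_prime(x1))  # |g'| also covers decreasing g
    return f_y


def f_x(x):
    """Assumed example: X ~ Uniform(0, 1)."""
    return 1.0 if 0 <= x <= 1 else 0.0


# Assumed example: the strictly decreasing g(x) = e^{-x}.
f_y = monotone_transform_pdf(
    f_x,
    g_inv=lambda y: -math.log(y),
    g_prime=lambda x: -math.exp(-x),
    has_solution=lambda y: math.exp(-1) <= y <= 1,
)

print(f_y(0.5), f_y(0.9), f_y(2.0))

# The resulting density should integrate to 1 over [1/e, 1] (midpoint rule).
a, b, n = math.exp(-1), 1.0, 100_000
h = (b - a) / n
total = h * sum(f_y(a + (k + 0.5) * h) for k in range(n))
print(total)
```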

To see how to use the formula, let's look at an example.

Example

Let $X$ be a continuous random variable with PDF \begin{equation} \nonumber f_X(x) = \left\{ \begin{array}{l l} 4x^3& \quad 0 < x \leq 1\\ 0 & \quad \text{otherwise} \end{array} \right. \end{equation} and let $Y=\frac{1}{X}$. Find $f_Y(y)$.

- Solution

- First note that $R_Y=[1,\infty)$. Also, note that $g(x)=\frac{1}{x}$ is strictly decreasing and differentiable on $(0,1]$, so we may use Equation 4.5. We have $g'(x)=-\frac{1}{x^2}$. For any $y \in [1,\infty)$, $x_1=g^{-1}(y)=\frac{1}{y}$. So, for $y \in [1,\infty)$,

$$
\begin{aligned}
f_Y(y) &= \frac{f_X(x_1)}{|g'(x_1)|} \\
&= \frac{4 x_1^3}{\left|-\frac{1}{x_1^2}\right|} \\
&= 4x_1^5 \\
&= \frac{4}{y^5}.
\end{aligned}
$$

Thus, we conclude \begin{equation} \nonumber f_Y(y) = \left\{ \begin{array}{l l} \frac{4}{y^5}& \quad y \geq 1\\ 0 & \quad \text{otherwise} \end{array} \right. \end{equation}
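A quick Monte Carlo check of $f_Y(y)=4/y^5$ is sketched below. It assumes inverse-transform sampling (a standard technique not used in the original text): since $F_X(x)=x^4$ on $(0,1]$, we can take $X=U^{1/4}$ with $U$ uniform on $(0,1]$. Integrating $f_Y$ gives the tail probability $P(Y>y)=\int_y^\infty 4t^{-5}\,dt=y^{-4}$ for $y \geq 1$, which the sketch compares with simulated frequencies. The seed, sample size, and names are illustrative.

```python
import random


def tail_y(y):
    """P(Y > y) = y^{-4} for y >= 1, obtained by integrating f_Y(t) = 4 / t^5."""
    return y ** -4 if y >= 1 else 1.0


rng = random.Random(2)
n = 200_000
# Inverse transform: F_X(x) = x^4, so X = U^{1/4}; 1 - random() keeps U in (0, 1].
samples = [1.0 / (1.0 - rng.random()) ** 0.25 for _ in range(n)]

for y in (1.1, 1.5, 2.0):
    empirical = sum(s > y for s in samples) / n
    print(f"y = {y}: empirical P(Y > y) = {empirical:.4f}, y^-4 = {tail_y(y):.4f}")
```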


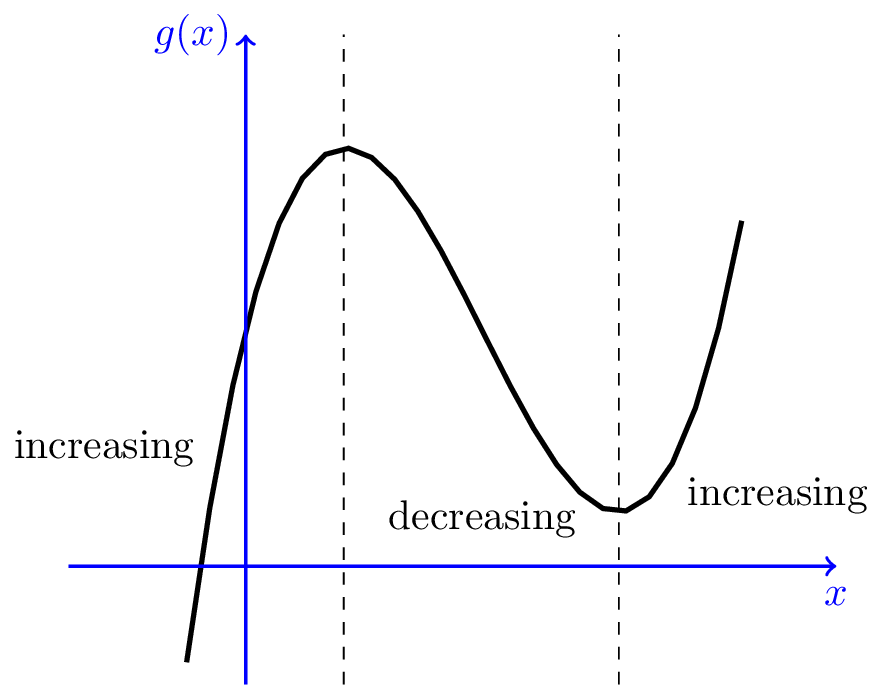

Theorem 4.1 can be extended to a more general case. In particular, if $g$ is not monotonic, we can usually partition its domain into a finite number of intervals on each of which $g$ is monotonic and differentiable. Figure 4.3 shows a function $g$ that has been divided into monotonic parts. We may state a more general form of Theorem 4.1.

Theorem 4.2

Consider a continuous random variable $X$ with range $R_X$, and let $Y=g(X)$. Suppose that we can partition $R_X$ into a finite number of intervals such that $g(x)$ is strictly monotone and differentiable on each interval. Then the PDF of $Y$ is given by $$f_Y(y)= \sum_{i=1}^{n} \frac{f_X(x_i)}{|g'(x_i)|}= \sum_{i=1}^{n} f_X(x_i) \cdot \left|\frac{dx_i}{dy}\right| \tag{4.6}$$ where $x_1, x_2, \dots, x_n$ are the real solutions of $g(x)=y$.
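Equation 4.6 is a sum over the solutions of $g(x)=y$, which suggests the following minimal Python sketch. It assumes the caller can supply a function `roots(y)` returning the real solutions $x_i$ (an empty list yields $f_Y(y)=0$); this interface is a hypothetical choice, not part of the text. As a cross-check it revisits the earlier example $X \sim Uniform(-1,1)$, $Y=X^2$, where the CDF method gave $f_Y(y)=\frac{1}{2\sqrt{y}}$ on $(0,1]$.

```python
import math


def piecewise_transform_pdf(f_x, roots, g_prime):
    """Return f_Y for Y = g(X) via Equation 4.6.

    roots(y) returns the list of real solutions x_i of g(x) = y
    (an empty list gives f_Y(y) = 0); g_prime is the derivative of g.
    """
    def f_y(y):
        return sum(f_x(x) / abs(g_prime(x)) for x in roots(y))
    return f_y


def f_x(x):
    """X ~ Uniform(-1, 1)."""
    return 0.5 if -1 <= x <= 1 else 0.0


def roots(y):
    # x^2 = y has the two solutions +sqrt(y) and -sqrt(y) for y > 0;
    # y = 0 has probability zero and is skipped, as in the text.
    return [math.sqrt(y), -math.sqrt(y)] if y > 0 else []


f_y = piecewise_transform_pdf(f_x, roots, g_prime=lambda x: 2 * x)

for y in (0.1, 0.5, 0.9, 1.5):
    # Compare with the CDF-method result: 1 / (2 sqrt(y)) on (0, 1], 0 for y > 1.
    print(y, f_y(y), 1 / (2 * math.sqrt(y)) if y <= 1 else 0.0)
```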

Let us look at an example to see how we can use Theorem 4.2.

Example

Let $X$ be a continuous random variable with PDF $$f_X(x) = \frac{1}{\sqrt{2 \pi}} e^{-\frac{x^2}{2}}, \hspace{20pt} \textrm{for all } x \in \mathbb{R}$$ and let $Y=X^2$. Find $f_Y(y)$.

- Solution

- We note that the function $g(x)=x^2$ is strictly decreasing on the interval $(-\infty,0)$, strictly increasing on the interval $(0, \infty)$, and differentiable on both intervals, with $g'(x)=2x$. Thus, we can use Equation 4.6. First, note that $R_Y=(0, \infty)$. Next, for any $y \in (0,\infty)$ we have two solutions of $y=g(x)$, namely $$x_1=\sqrt{y}, \hspace{10pt} x_2=-\sqrt{y}$$ Note that although $0 \in R_X$, it has not been included in our partition of $R_X$. This is not a problem, since $P(X=0)=0$. Indeed, in the statement of Theorem 4.2, we could replace $R_X$ by $R_X-A$, where $A$ is any set for which $P(X \in A)=0$. In particular, this is convenient when we exclude the endpoints of the intervals. Thus, we have

$$
\begin{aligned}
f_Y(y) &= \frac{f_X(x_1)}{|g'(x_1)|}+\frac{f_X(x_2)}{|g'(x_2)|} \\
&= \frac{f_X(\sqrt{y})}{|2\sqrt{y}|}+\frac{f_X(-\sqrt{y})}{|-2\sqrt{y}|} \\
&= \frac{1}{2\sqrt{2 \pi y}} e^{-\frac{y}{2}}+\frac{1}{2\sqrt{2 \pi y}} e^{-\frac{y}{2}} \\
&= \frac{1}{\sqrt{2 \pi y}} e^{-\frac{y}{2}}, && \textrm{for } y \in (0,\infty).
\end{aligned}
$$
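The result can be cross-checked two ways in Python. For a standard normal $X$, $F_Y(y)=P(|X| \leq \sqrt{y})=\operatorname{erf}(\sqrt{y/2})$ for $y>0$, so a central finite difference of that CDF should reproduce $f_Y$; a Monte Carlo run that squares normal draws should match the same CDF. This is a sketch under assumed choices (seed, sample size, step size, names), using only `math.erf` and `random.Random.gauss` from the standard library.

```python
import math
import random


def pdf_y(y):
    """f_Y(y) = e^{-y/2} / sqrt(2 pi y) for y > 0, from Equation 4.6."""
    return math.exp(-y / 2) / math.sqrt(2 * math.pi * y) if y > 0 else 0.0


def cdf_y(y):
    """F_Y(y) = P(|X| <= sqrt(y)) = erf(sqrt(y / 2)) for a standard normal X."""
    return math.erf(math.sqrt(y / 2)) if y > 0 else 0.0


# 1) The derivative of F_Y should reproduce f_Y.
h = 1e-6
for y in (0.5, 1.0, 3.0):
    slope = (cdf_y(y + h) - cdf_y(y - h)) / (2 * h)
    print(f"y = {y}: dF_Y/dy = {slope:.6f}, f_Y = {pdf_y(y):.6f}")

# 2) Monte Carlo: square standard normal draws and compare with F_Y.
rng = random.Random(3)
samples = [rng.gauss(0.0, 1.0) ** 2 for _ in range(200_000)]
for y in (0.5, 1.0, 3.0):
    empirical = sum(s <= y for s in samples) / len(samples)
    print(f"y = {y}: empirical F_Y = {empirical:.4f}, erf(sqrt(y/2)) = {cdf_y(y):.4f}")
```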


The print version of the book is available through Amazon here.


Source: https://www.probabilitycourse.com/chapter4/4_1_3_functions_continuous_var.php
